Every day, people use voice commands, like “Hey Google,” or their hands to navigate their phones. However, that’s not always possible for people with severe motor and speech disabilities.

To make Android more accessible for everyone, we’re introducing two new tools that make it easier to control your phone and communicate using facial gestures: Camera Switches and Project Activate. Built with feedback from people who use alternative communication technology, both of these tools use your phone’s front-facing camera and machine learning technology to detect your face and eye gestures. We’ve also expanded our existing accessibility tool, Lookout, so people who are blind or low-vision can get more things done quickly and easily.

Camera Switches: navigate Android with facial gestures

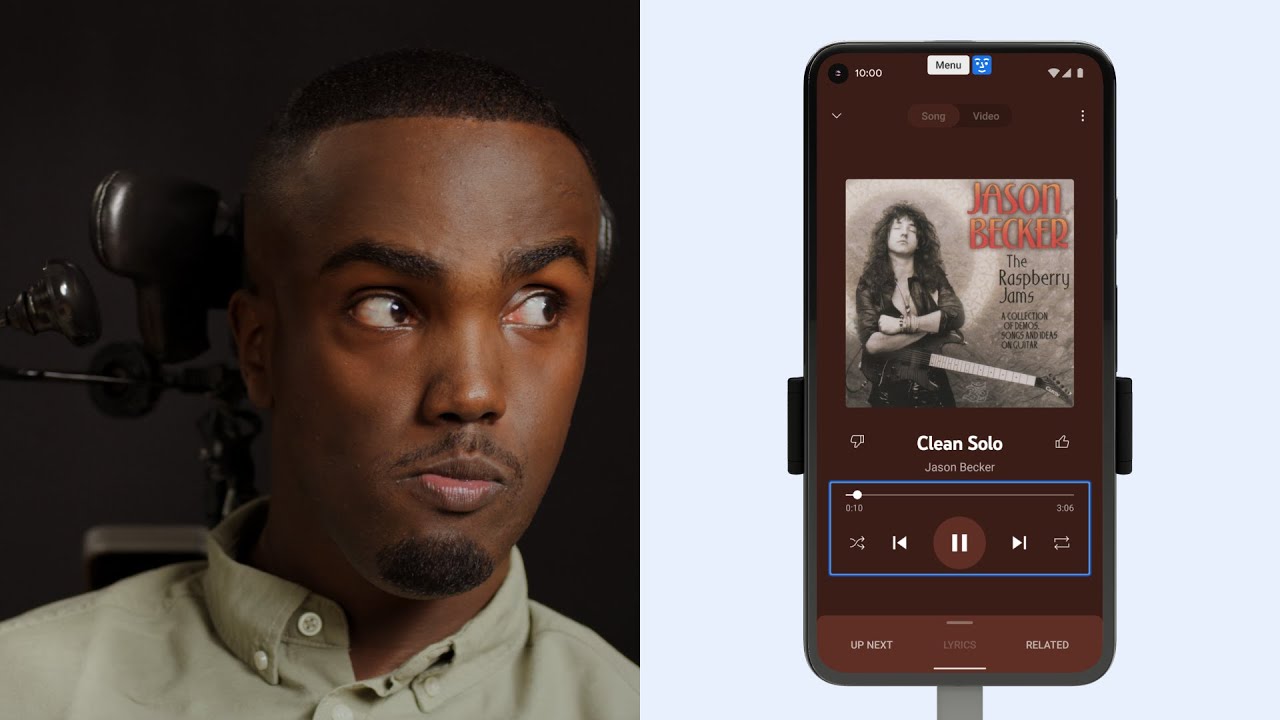

In 2015, we launched Switch Access for Android, which lets people with limited dexterity navigate their devices more easily using adaptive buttons called physical switches. Camera Switches, a new feature in Switch Access, turns your phone’s camera into a new type of switch that detects facial gestures. Now it’s possible for anyone to use eye movements and facial gestures to navigate their phone, without hands or voice. Camera Switches begins rolling out within the Android Accessibility Suite this week and will be fully available by the end of the month.

You can choose from six gestures (look right, look left, look up, smile, raise eyebrows or open your mouth) to scan and select on your phone. There are different scanning methods to choose from, so no matter your experience with switch scanning, you can move between items on your screen with ease. You can also assign gestures to open notifications, jump back to the home screen or pause gesture detection. Camera Switches can be used in tandem with physical switches.

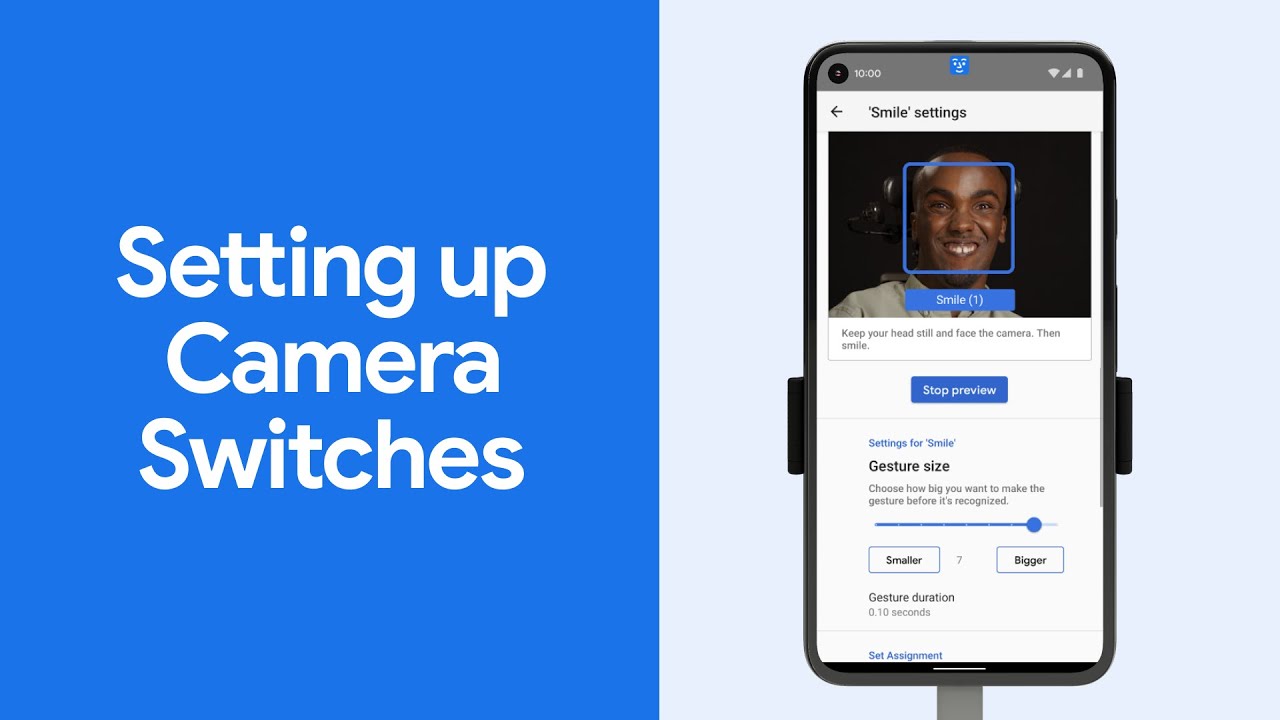

We heard from people who have varying speech and motor impairments that customization options would be critical. With Camera Switches, you or a caregiver can select how long to hold a gesture and how big it has to be to be detected. You can use the test screen to confirm what works best for you.

An individual and their caregiver customize Camera Switches. The setup process, shown through a finger on the screen, showcases customization for the size of gestures and assigning the gesture to a scanning action.
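For readers curious how the two settings described above (how long a gesture is held and how big it has to be) might combine, here is a minimal sketch. It is not the Camera Switches implementation: `GestureSetting`, `detect_gesture`, the 0.0 to 1.0 size scale and the sample format are all hypothetical names and assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class GestureSetting:
    """Hypothetical per-gesture settings; not the real Camera Switches API."""
    min_size: float          # how big the gesture must be (assumed 0.0-1.0 scale)
    min_hold_seconds: float  # how long the gesture must be held


def detect_gesture(
    samples: Iterable[Tuple[float, float]],
    setting: GestureSetting,
) -> Optional[float]:
    """Return the timestamp at which the gesture counts as detected, or None.

    `samples` is a time-ordered sequence of (timestamp_seconds, size) pairs,
    standing in for whatever a face-tracking model would report per frame.
    """
    held_since = None
    for timestamp, size in samples:
        if size >= setting.min_size:
            # Gesture is big enough: start (or continue) timing the hold.
            if held_since is None:
                held_since = timestamp
            if timestamp - held_since >= setting.min_hold_seconds:
                return timestamp
        else:
            # Gesture dropped below the size threshold: reset the hold timer.
            held_since = None
    return None


if __name__ == "__main__":
    smile = GestureSetting(min_size=0.6, min_hold_seconds=0.5)
    frames = [(0.0, 0.2), (0.25, 0.7), (0.5, 0.8), (0.75, 0.9)]
    print(detect_gesture(frames, smile))
```

In this sketch, raising `min_size` or `min_hold_seconds` makes accidental triggers less likely, while lowering them makes detection easier for someone with limited facial mobility, which is the kind of trade-off a test screen helps you tune.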

To get started, open Switch Access in the Accessibility settings on your Android phone, or download the app. For more information, go to g.co/cameraswitches.

Project Activate: making communication more accessible

Project Activate, a new Android application, lets people use these same facial gestures to quickly activate customized actions with a single gesture — like speaking a preset phrase, sending a text, making a phone call or playing audio.

To understand how face gestures could allow for communication and personal expression, we worked with numerous people with motor and speech impairments and their caregivers. Darren Gabbert is an expert at using assistive technology and communicates using a speech-generating device. He uses physical switches to type letters that his computer speaks aloud. It’s a slow process that makes fully participating in conversations difficult. With Project Activate, Darren has a quick and portable way to respond in the moment — using just his phone. He can answer yes or no to questions, ask for a minute to type something into his speech-generating device, or shoot a text to his wife asking her to come in from another room.

Customization is built into all areas of the application — from the particular actions you’d like to trigger, to the facial gestures you want to use, to how sensitive the application is to your facial gestures. So whatever your facial mobility, you can use Project Activate to express yourself.

Project Activate is available in the U.S., U.K., Canada, and Australia in English and can be downloaded from the Google Play store.

Lookout: Expanding to new currencies and modes

We’re always updating our accessibility features and tools so that more people can benefit. In 2019, we launched Lookout for people who are blind or low-vision. Using a person’s smartphone camera, Lookout recognizes objects and text in the physical world and announces them aloud. Lookout has several modes to make a variety of everyday tasks easier — from identifying food products to describing objects in your surroundings.

Last year, we introduced Documents mode for capturing text on a page. Starting today, Documents mode can read handwritten text, including sticky notes and birthday cards from friends and family. Lookout supports handwriting in Latin-based languages, with more coming. Additionally, with more people around the world discovering Lookout, we’ve expanded Currency mode to recognize Euros and Indian Rupees, with more currencies on the way.

Building a more accessible Android

We believe in building truly helpful products with and for people with disabilities and hope these features can make Android even more accessible. If you have questions on how these features can be helpful, visit our Help Center, connect with our Disability Support team or learn more about our accessibility products on Android.
