5.5 Using the discriminator to improve data quality

At this point, I was struggling to find new programmatic ways to improve dataset quality. New rule-based approaches had low precision, filtering out too many good emotes along with the bad. However, a non-trivial proportion of low-quality emotes remained, far too many to remove by hand. The goal was to build a more sophisticated system that could detect these emotes and remove them from the training dataset. While coming up with ideas, I realized that I already had something that could work for this task.

Although the GAN trained two neural networks, a discriminator and generator, only the generator was actually used. The discriminator was discarded after training, as it was no longer needed to synthesize emotes.

While training a GAN, the purpose of the discriminator is to detect which emotes come from the training set and which are generated by the generator. As such, the discriminator becomes very adept at detecting “strange” images which do not match the patterns found in the majority of the training data. During training, this signal is used to update the generator’s weights so that it produces better quality images.

I took the training dataset and fed every emote through the trained discriminator. The output was a score for each emote ranging from -100 to 100. This score indicated the discriminator’s belief that the emote came from the training dataset (100) or was generated (-100). The scores for the training emotes were roughly normally distributed, with a large central cluster and tails of outliers at both very positive and very negative scores. Interestingly, the mean score for the training emotes was only weakly positive.

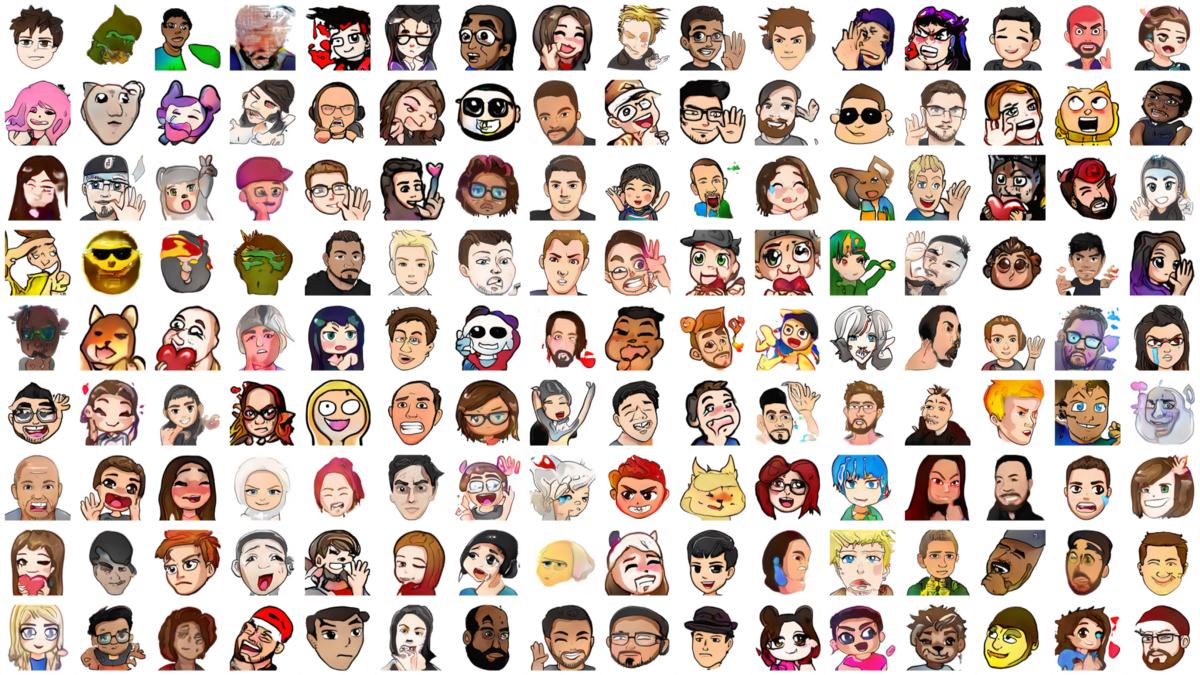
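
A minimal sketch of this scoring pass might look like the following. It assumes the discriminator can be called on one image at a time and returns a single number. The names `score_dataset`, `summarize`, and `toy_discriminator` are hypothetical, and the toy discriminator is only a stand-in so the example runs without a trained model.

```python
import statistics


def score_dataset(emotes, discriminator):
    """Return {emote_id: score} for every emote in the training set.

    `emotes` maps an emote_id to its image data, and `discriminator` is any
    callable that returns one number per image (an assumption for this sketch).
    """
    return {emote_id: float(discriminator(image)) for emote_id, image in emotes.items()}


def summarize(scores):
    """Basic statistics for checking the shape of the score distribution."""
    values = list(scores.values())
    return {
        "mean": statistics.mean(values),
        "stdev": statistics.pstdev(values),
        "min": min(values),
        "max": max(values),
    }


# Toy data: each "image" is just a short list of pixel intensities.
toy_emotes = {1: [0.1, 0.2], 2: [0.9, 0.8], 3: [0.5, 0.5]}


def toy_discriminator(image):
    # Stand-in for the trained network; NOT a real model.
    return 100 * (sum(image) / len(image) - 0.5)


scores = score_dataset(toy_emotes, toy_discriminator)
summary = summarize(scores)
```

Plotting a histogram of `scores.values()` is then enough to see the central cluster and the tails at each end.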

I then visually inspected the emotes which were scored at the extreme ends of this range. Training emotes which scored very low (below 0) were generally overly simplified, heavily rotated, did not have a very clear face in the image, or had text in the image that EAST had missed. These were removed from the dataset.
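
Pulling out both tails for manual review could be sketched as below. The low cutoff of 0 matches the text above. The high cutoff of 50 is purely an assumed value for illustration, and `split_by_score` is a hypothetical helper name.

```python
def split_by_score(scores, low_cutoff=0.0, high_cutoff=50.0):
    """Split emote ids into low-tail, high-tail, and middle groups.

    `scores` maps emote_id -> discriminator score. Both tails are sorted so
    the most extreme emotes come first, which makes visual review easier.
    `high_cutoff` is an assumed value, not one taken from the original run.
    """
    low = sorted((i for i, s in scores.items() if s < low_cutoff), key=scores.get)
    high = sorted(
        (i for i, s in scores.items() if s > high_cutoff),
        key=scores.get,
        reverse=True,
    )
    middle = [i for i, s in scores.items() if low_cutoff <= s <= high_cutoff]
    return low, high, middle


example_scores = {"a": -40, "b": 5, "c": 80, "d": -90, "e": 60}
low_tail, high_tail, middle = split_by_score(example_scores)
```

Here `low_tail` and `high_tail` are only candidates for review. The decision to drop an emote was still made by looking at the images.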

On the opposite side, the very high-scored emotes were also useful to remove. Although these were generally high quality faces, they were plagued by another problem — duplication.

Each of these emotes was duplicated dozens of times in the dataset. As discussed earlier, this duplication caused training to drastically over-weight the features from these emotes. This could be observed in the generated emotes from the last training run.

These duplicates were not filtered out during the earlier image deduplication because they were uploaded many days apart. As such, their drastically different emote_ids fell outside the 1000-emote-wide window used when the duplicate detection code was run. These were also removed from the dataset and training was re-run.
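
To illustrate why a windowed pass misses duplicates that are far apart, here is a small sketch comparing it with a global pass. It makes two assumptions: an exact image hash stands in for whatever similarity check the original deduplication used, and the window is measured as a difference in emote_id. Both function names are hypothetical.

```python
from collections import defaultdict


def windowed_duplicates(records, window=1000):
    """Flag an emote only if the same hash appeared within `window` ids before it."""
    last_seen = {}  # image_hash -> most recent emote_id with that hash
    dupes = []
    for emote_id, image_hash in sorted(records):
        previous = last_seen.get(image_hash)
        if previous is not None and emote_id - previous <= window:
            dupes.append(emote_id)
        last_seen[image_hash] = emote_id
    return dupes


def global_duplicates(records):
    """Flag every emote whose hash already appeared anywhere earlier in the dataset."""
    groups = defaultdict(list)
    for emote_id, image_hash in sorted(records):
        groups[image_hash].append(emote_id)
    # Keep the first emote in each group and report the rest as duplicates.
    return [emote_id for ids in groups.values() for emote_id in ids[1:]]


# The same image uploaded four times, three of them far apart in emote_id.
records = [(10, "abc"), (15, "abc"), (50_000, "abc"), (120_000, "abc"), (20, "xyz")]

near_only = windowed_duplicates(records)
everything = global_duplicates(records)
```

In this toy data the windowed pass flags only emote 15, while the global pass also catches the re-uploads at 50000 and 120000.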

I wonder if this approach of using the trained discriminator to do useful work outside of the backpropagation step could be applied more broadly to other aspects of GAN training. One potential use of the trained discriminator is automatically adjusting the composition of the data during training in order to speed up GAN convergence. Being able to identify which specific training examples provide most of the “learning” would allow you to disproportionately feed these through the GAN, potentially improving the training rate.
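
As a rough sketch of the "disproportionate feeding" idea, a batch could be drawn with per-example weights instead of uniformly. How those weights would be derived from the discriminator is left open here. The `usefulness` values below are made up for illustration, and `sample_batch` is a hypothetical helper.

```python
import random


def sample_batch(usefulness, batch_size, rng=None):
    """Draw emote ids with probability proportional to their weight.

    `usefulness` maps emote_id -> non-negative weight. How to compute that
    weight is an open question; this function only handles the sampling.
    Sampling is with replacement, so heavily weighted emotes can repeat.
    """
    rng = rng or random.Random()
    ids = list(usefulness)
    weights = [usefulness[i] for i in ids]
    return rng.choices(ids, weights=weights, k=batch_size)


# Made-up weights: emote "b" is assumed to be nine times as informative as "a".
usefulness = {"a": 1.0, "b": 9.0}
batch = sample_batch(usefulness, batch_size=1000, rng=random.Random(0))
```

Whether this would actually speed up convergence is untested. Oversampling a small set of examples could also reintroduce the over-weighting problem that duplicates caused.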

At this point, I felt that the improvements in generated emote quality had slowed enough that small iterative changes were unlikely to lead to further realism. As such, I chose to move on and create new GAN training datasets using the lessons learned from trying to synthesize realistic faces.

Website: LINK