DeepWay helps the visually impaired navigate with a tap

— August 20th, 2018

In order to help those with visual impairments navigate streets, college student Satinder Singh has come up with an innovative solution that literally pokes the user in the right direction.

Singh’s system, called DeepWay, uses a chest-mounted camera to take images of the road that a wearer is walking down, then feeds this information to a laptop for processing.

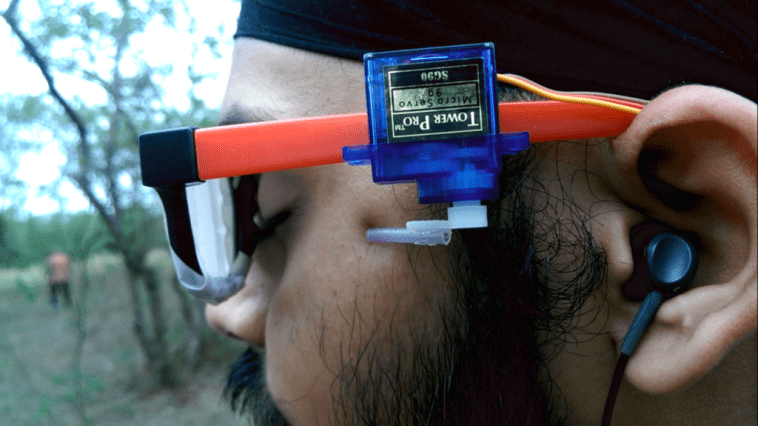

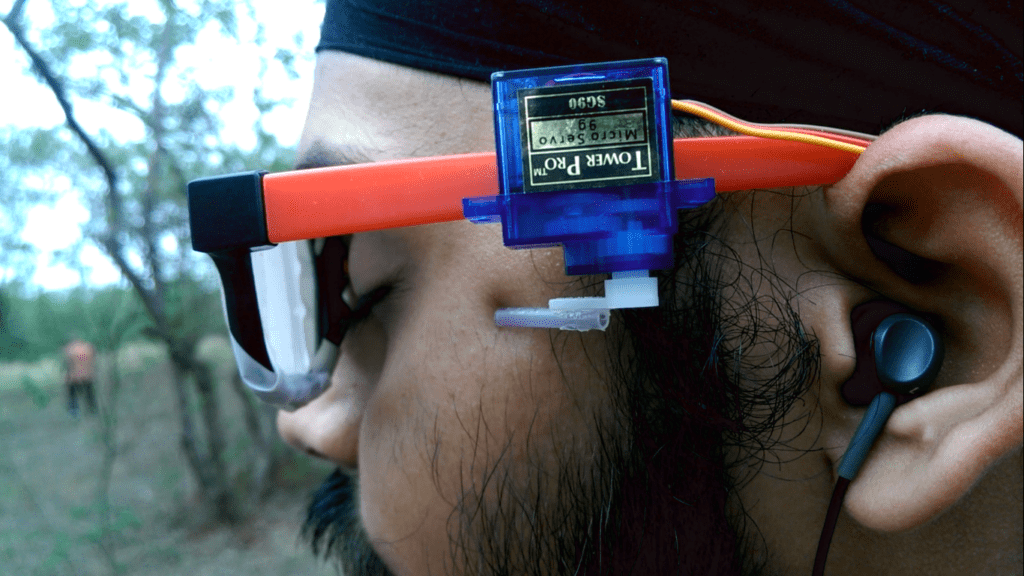

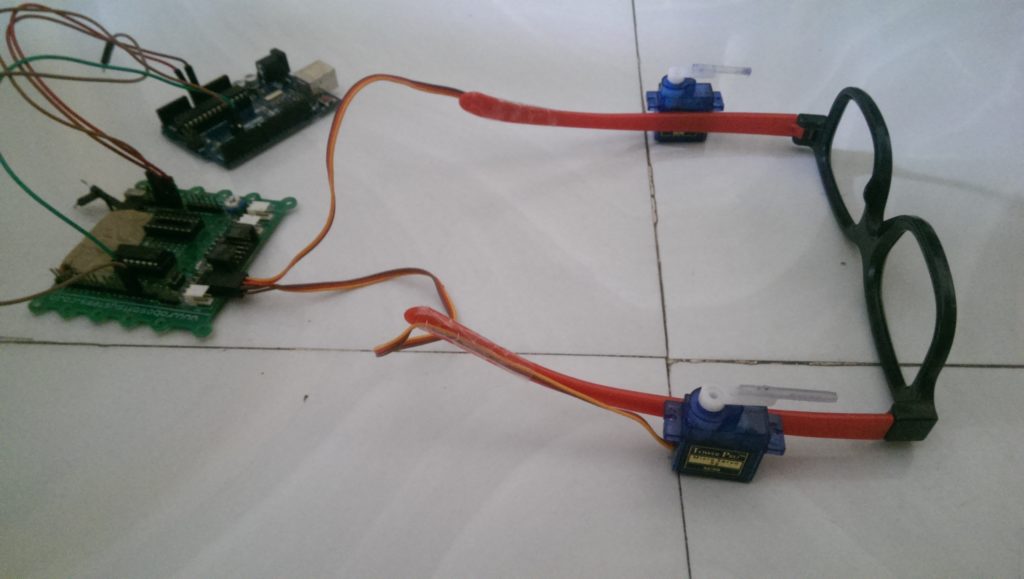

If the deep learning algorithm determines that the user needs to move left or right to stay on the path, a serial signal is sent to an Arduino Uno, which in turn commands one of two servos mounted to a pair of glasses to tap the person to indicate which way to walk. Additional environmental feedback is provided through a pair of earphones.

This project is an aid to the blind. To date, there has been no technological advancement in the way the blind navigate, so I have used deep learning, particularly convolutional neural networks, to help them navigate through the streets.

My project is an implementation of CNNs, and we all know that they require a large amount of training data. So the first obstacle in my way was a correctly labeled dataset of images. I went around my college and recorded a lot of videos (of all types of roads and also off-road paths). Then I wrote a basic Python script to save images from the videos (I saved one image out of every five frames, because consecutive frames are almost identical). I collected almost 10,000 such images, about 3,300 for each class (i.e. left, right, and center).
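As a rough illustration of the frame-sampling step described above, the sketch below keeps one frame out of every five. The original script is not shown, so this is only one plausible shape for it: the use of OpenCV, the function names (`sample_frames`, `read_video`, `save_sampled_frames`), and the output file naming are all assumptions.

```python
from itertools import islice
import os


def sample_frames(frames, step=5):
    """Return an iterator over every `step`-th frame, starting with the first."""
    return islice(frames, 0, None, step)


def read_video(path):
    """Yield frames from a video file. Assumes OpenCV (cv2) is installed."""
    import cv2  # imported lazily so the sampling logic works without OpenCV

    capture = cv2.VideoCapture(path)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:  # end of the video or a read error
                break
            yield frame
    finally:
        capture.release()


def save_sampled_frames(video_path, out_dir, step=5):
    """Write every `step`-th frame of the video to out_dir as a JPEG.

    Returns the number of images saved. The file naming is hypothetical.
    """
    import cv2

    os.makedirs(out_dir, exist_ok=True)
    count = 0
    sampled = sample_frames(read_video(video_path), step)
    for count, frame in enumerate(sampled, start=1):
        cv2.imwrite(os.path.join(out_dir, f"frame_{count:05d}.jpg"), frame)
    return count
```

Running something like `save_sampled_frames("walk.mp4", "dataset/left")` once per recorded video (both paths are placeholders) would fill one folder per class, which is a common layout for image classification datasets.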

I made a collection of CNN architectures and trained each model. Then I evaluated the performance of all the models and chose the one with the best accuracy. I got a training accuracy of about 97%. I got roughly the same accuracy for all the trained models, but I realized that the model in which I implemented regularization performed better on the test set.
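The selection step above comes down to comparing candidates on held-out data rather than on training accuracy, since the training numbers were nearly identical. A minimal sketch of that comparison follows. The model names and every accuracy value here are invented placeholders for illustration, not results from the project, and `pick_best` is a hypothetical helper.

```python
def pick_best(results):
    """Return the name of the candidate with the highest test accuracy."""
    return max(results, key=lambda name: results[name]["test"])


# Placeholder numbers, made up purely to illustrate the comparison.
# They are NOT measurements from DeepWay.
example_results = {
    "plain_cnn": {"train": 0.97, "test": 0.90},
    "regularized_cnn": {"train": 0.97, "test": 0.94},
}

best = pick_best(example_results)
```

With equal training accuracy, ranking by the test score is what separates a regularized model from one that has simply memorized the training images.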

The next problem was how to tell blind people which direction to move in. So I connected my Python program to an Arduino. I connected the servo motors to the Arduino and fixed them to the sides of a pair of spectacles. Using serial communication, I can tell the Arduino which servo motor to move, which then presses against one side of the blind person’s head to indicate which direction to move in.
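The Python side of that serial link might look roughly like the sketch below. The actual protocol is not described here, so the single-byte codes (`b"L"`, `b"R"`), the device path, the baud rate, and the helper names are all assumptions. It uses the pyserial package to open the port.

```python
# Hypothetical one-byte commands. The Arduino sketch would have to expect
# the same values. "center" sends nothing because no correction is needed.
COMMANDS = {"left": b"L", "right": b"R"}


def command_for(label):
    """Map a predicted class label to a serial command, or None."""
    return COMMANDS.get(label)


def send_direction(write, label):
    """Send the command for `label` using `write` (e.g. a port's write method).

    Returns True if a command was sent, False if no tap is needed.
    """
    command = command_for(label)
    if command is None:
        return False
    write(command)
    return True


def open_arduino(device="/dev/ttyUSB0", baud=9600):
    """Open the serial port. Device path and baud rate are placeholders."""
    import serial  # pyserial, imported lazily so the logic above is testable

    return serial.Serial(device, baud, timeout=1)
```

In use, the classifier's output for each frame would be passed along, for example `send_direction(arduino.write, "left")` where `arduino = open_arduino()`. Passing the write function in, rather than the port itself, keeps the mapping logic testable without hardware attached.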

A demo of DeepWay can be seen in the video below, while code for this open source project is available on GitHub.
