People with visual impairments also enjoy going out to a restaurant for a nice meal, which is why it is common for wait staff to place the salt and pepper shakers in a consistent fashion: salt on the right and pepper on the left. That helps visually impaired diners quickly find the seasoning they’re looking for, and a similar arrangement works for utensils. But what happens after the diner sets down a utensil in the middle of a meal? The ForkLocator is an AI system that can help them locate the utensil again.

The ForkLocator is a wearable device meant for people with visual impairments. It uses object recognition and haptic cues to help the user locate their fork. The current prototype, built by Revoxdyna, only works with forks, but it would be possible to expand the system to work with the full range of utensils. The haptic cues come from four servo motors, which prod the user’s arm to indicate the direction in which they should move their hand to find the fork.

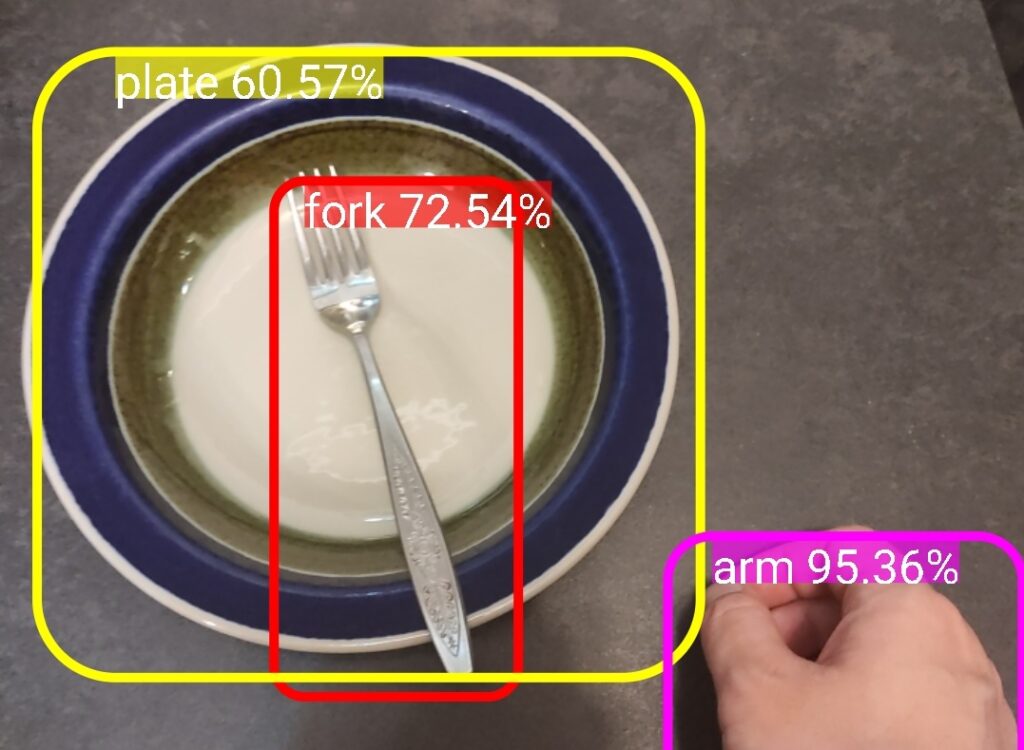

The user’s smartphone performs the object recognition, so it should be worn or positioned in such a way that its camera faces the table. The smartphone app looks for the plate, the fork, and the user’s hand. It then calculates a vector from the hand to the fork and tells an Arduino board to actuate the servo motor corresponding to that direction. The servos and the Arduino attach to a 3D-printed frame that straps to the user’s upper arm.
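
To make the hand-to-fork step a little more concrete, here is a minimal Python sketch of how a direction could be picked from two detected positions. It is not Revoxdyna's code: the function name `direction_to_fork`, the pixel coordinates, the `dead_zone` threshold, and the servo numbering in `SERVO_FOR_DIRECTION` are all assumptions made for illustration, and the real app may divide up the directions differently.

```python
import math

# Hypothetical wiring: one servo per direction on the arm frame.
SERVO_FOR_DIRECTION = {"right": 0, "up": 1, "left": 2, "down": 3}


def direction_to_fork(hand, fork, dead_zone=20.0):
    """Pick the direction the hand should move to reach the fork.

    hand and fork are (x, y) centers in image pixels, with y growing
    downward as in most camera frames. Returns "left", "right", "up",
    "down", or None when the hand is within dead_zone pixels of the fork.
    """
    dx = fork[0] - hand[0]
    dy = fork[1] - hand[1]

    # Close enough: no cue needed.
    if math.hypot(dx, dy) <= dead_zone:
        return None

    # Use whichever axis has the larger offset.
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


def servo_for(hand, fork):
    """Return the index of the servo to actuate, or None for no cue."""
    direction = direction_to_fork(hand, fork)
    return None if direction is None else SERVO_FOR_DIRECTION[direction]
```

In a setup like the one described, the phone would send the chosen servo index to the Arduino, which would then pulse that servo against the user's arm. The dead zone is there so the motors stop prodding once the hand is effectively on the fork.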

A lot more development is necessary before a system like the ForkLocator would be ready for the consumer market, but the accessibility benefits are something to applaud.

The post This AI system helps visually impaired people locate dining utensils appeared first on Arduino Blog.
