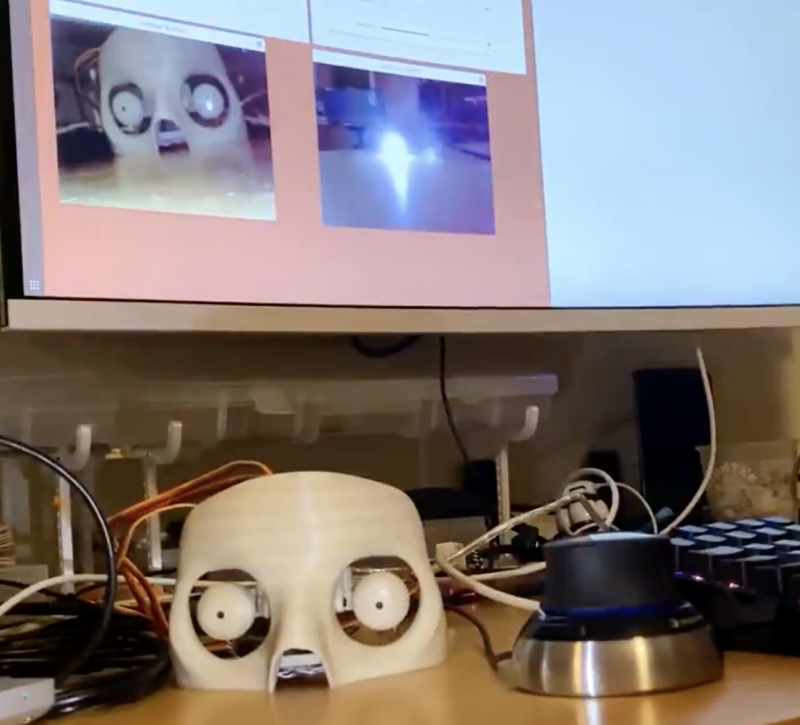

“In the movie, there’s a camera on a stalk which is aimed at the subject’s eyeball, and a monitor showing that eye isolated and magnified. My concept was to have a high-resolution, wide-angle camera, and use the face tracking code to crop and zoom in to any eye it detected. Anyone approaching the machine to look at it would be stared back at by their own eye.”

Animated response

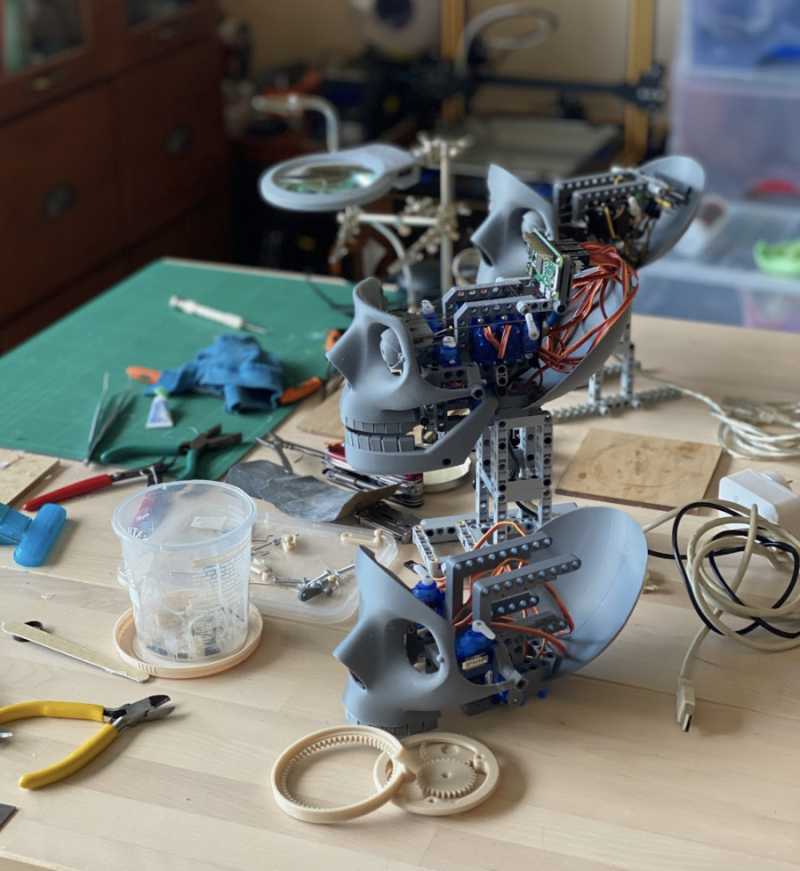

James knew immediately that he wanted to use a Raspberry Pi Zero for his VK-Pocket camera project. Composite video out, which the Pi Zero supports, was essential for driving the CRT (cathode ray tube) display he salvaged from an old video camera. “Raspberry Pi Zero was my first choice for this build. I love these things,” he exclaims. “They’re a full Linux PC in a microcontroller form factor. I’ve put them in all sorts of builds, from animatronic heads to robotic insects.” [Yes, we want to hear more about these projects, too – Ed].

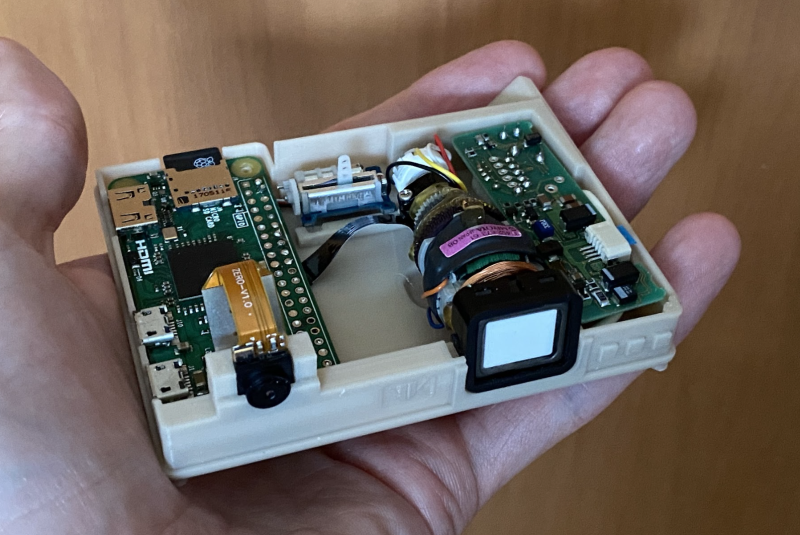

James is a stickler for detail, so as well as accommodating the mini screen, camera, and Pi Zero, it was vital that the case for the homebrew VK machine looked like the original film prop. Illustrating this is the “little servo” he added “to push some cosmetic bellows up and down,” as a nod to those in the film. There are two versions of the VK machine in Blade Runner, he explains, and “the device I ended up building is a bit of a mix of both of those, in order to fit everything in.”

The servo is controlled with the pigpio library, directly from a GPIO pin. Both the servo and the display draw less than 500 mA and run from the same USB connection as the Pi Zero, so no extra power source is needed.
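A minimal sketch of driving the bellows servo with pigpio’s `set_servo_pulsewidth()` follows. The GPIO pin (18), the 1000 to 2000 µs pulse range, and the smooth raised-cosine motion are all assumptions for illustration; the article doesn’t say which pin, range, or movement James used.

```python
import math
import time

# Hypothetical values: the article does not say which pin or pulse range James used.
SERVO_GPIO = 18        # BCM pin numbering
MIN_PULSE_US = 1000    # assumed "bellows down" pulse width
MAX_PULSE_US = 2000    # assumed "bellows up" pulse width


def bellows_pulse_width(t, period_s=4.0):
    """Return a servo pulse width (µs) for time t (seconds).

    A raised cosine starts at MIN_PULSE_US, peaks at MAX_PULSE_US halfway
    through each period and returns, so the bellows rise and fall smoothly.
    """
    phase = (1 - math.cos(2 * math.pi * t / period_s)) / 2  # 0.0 .. 1.0
    return round(MIN_PULSE_US + phase * (MAX_PULSE_US - MIN_PULSE_US))


def drive_bellows(pi, duration_s=8.0, step_s=0.05, sleep=time.sleep):
    """Animate the bellows for duration_s seconds, then stop sending pulses.

    `pi` is anything with pigpio's set_servo_pulsewidth(gpio, pulsewidth)
    method, e.g. a pigpio.pi() connection to the pigpiod daemon.
    """
    steps = round(duration_s / step_s)
    for i in range(steps):
        pi.set_servo_pulsewidth(SERVO_GPIO, bellows_pulse_width(i * step_s))
        sleep(step_s)
    pi.set_servo_pulsewidth(SERVO_GPIO, 0)  # 0 switches the servo pulses off
```

On the Pi itself, you would start the `pigpiod` daemon and pass in `pigpio.pi()`. Passing the connection in, rather than creating it inside the function, also lets the animation be tested on a PC with a stand-in object.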

Because the case was 3D-printed, James was able to try a few iterations before settling on a design in which everything fits comfortably. Even so, he says, the display’s control board ended up at a slightly odd angle. Mounting the camera on a stalk turned out to be tricky, too, “so I put it inside the main case, looking out through a hole.”

The eyes have it

James wrote “a quite minimal” amount of Python code (magpi.cc/pieyepy) “to keep the high-res live video updated via the GPU while the CPU does the eye tracking.” He used OpenCV to detect faces along with five facial ‘landmarks’, from which the eye locations are taken. Although the eye tracker appears to work in real time, James realised that updating it roughly once a second would be sufficient. “If you wanted to get clever, you could use motion vectors from the compression hardware to improve tracking between detections, but it seemed good enough just updating every second or so.” Running detection this infrequently keeps the processor load low, and it works nicely on a Pi Zero.
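The once-a-second update can be sketched as a small throttle wrapped around the face detector. This is not taken from James’s code: it assumes a hypothetical `detect(frame)` callable that returns one five-point landmark list per face, with the two eye centres first (five-landmark detectors commonly list the eyes before the nose and mouth corners).

```python
import time
from typing import Callable, List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Landmarks = Sequence[Point]  # five (x, y) points per face


def pick_eye(landmarks: Landmarks) -> Point:
    """Return one eye centre from a five-point landmark set.

    Assumes points 0 and 1 are the eye centres; picks whichever
    appears further left in the image.
    """
    a, b = landmarks[0], landmarks[1]
    return a if a[0] <= b[0] else b


class ThrottledEyeTracker:
    """Run an expensive face detector at most once per interval_s seconds.

    Between detections, update() returns the last known eye position, so the
    live video can keep zooming on it while the CPU stays mostly idle.
    """

    def __init__(self,
                 detect: Callable[[object], List[Landmarks]],
                 interval_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic):
        self.detect = detect          # hypothetical: frame -> landmarks per face
        self.interval_s = interval_s
        self.clock = clock
        self.last_run: Optional[float] = None
        self.eye: Optional[Point] = None

    def update(self, frame) -> Optional[Point]:
        now = self.clock()
        if self.last_run is not None and now - self.last_run < self.interval_s:
            return self.eye           # too soon: reuse the previous result
        self.last_run = now
        faces = self.detect(frame)
        if faces:                     # keep the old position if no face is found
            self.eye = pick_eye(faces[0])
        return self.eye
```

Injecting the clock keeps the throttle testable; on the device, `time.monotonic` is a sensible default because it never jumps backwards if the system time changes.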

The Pi Zero’s CPU processes 320 × 240 images at “maybe a couple of frames per second”, while the picamera library keeps the screen updated with the live image. “The video hardware can handle 2592 × 1944 at 15 fps, and crop, scale, and display it without touching the CPU,” James explains. As a result, the eye region is still reasonably detailed, even though it’s only a tiny portion of the camera’s view. “If you sit still, it locks on to your eye in less than a second, and stays well centred.”
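The crop-and-zoom step amounts to mapping the eye position found in the 320 × 240 detection frame onto a region of the full sensor. The sketch below assumes picamera’s `zoom` property, which takes a normalised `(x, y, w, h)` region, and a hypothetical zoom fraction of 0.1. Because 320 × 240 and 2592 × 1944 are both 4:3, using the same fraction on both axes preserves the aspect ratio.

```python
from typing import Tuple

Rect = Tuple[float, float, float, float]  # (x, y, w, h), each 0.0-1.0

SENSOR_W, SENSOR_H = 2592, 1944   # full-resolution mode quoted in the article
DET_W, DET_H = 320, 240           # low-res frame used for detection


def eye_zoom_rect(eye_x: float, eye_y: float, zoom: float = 0.1) -> Rect:
    """Return a normalised zoom region centred on an eye in the detection frame.

    zoom is a hypothetical fraction of the sensor to show; the region is
    clamped so it never extends past the edge of the image.
    """
    cx, cy = eye_x / DET_W, eye_y / DET_H
    x = min(max(cx - zoom / 2, 0.0), 1.0 - zoom)
    y = min(max(cy - zoom / 2, 0.0), 1.0 - zoom)
    return (x, y, zoom, zoom)


def sensor_pixels(rect: Rect) -> Tuple[int, int]:
    """How many full-resolution sensor pixels the zoomed region covers."""
    _, _, w, h = rect
    return round(w * SENSOR_W), round(h * SENSOR_H)
```

On the device, something like `camera.zoom = eye_zoom_rect(*eye)` would hand the crop to the GPU. At a 0.1 zoom, the region still covers about 259 × 194 sensor pixels, which is consistent with the eye staying reasonably detailed even though it fills only a small part of the view.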

There’s no word yet from James on whether his VK-Pocket machine actively analyses its subjects’ eyes to check whether or not they may actually be a replicant.
