— September 1st, 2021

People whose physical disabilities leave them unable to speak or communicate effectively can end up frustrated or largely ignored. In response, Hackaday users Malte, Marco, and Tim R set out to create a compact device that turns small eye movements into simple commands and phrases for easier communication. They call it the “Speak4Me.”

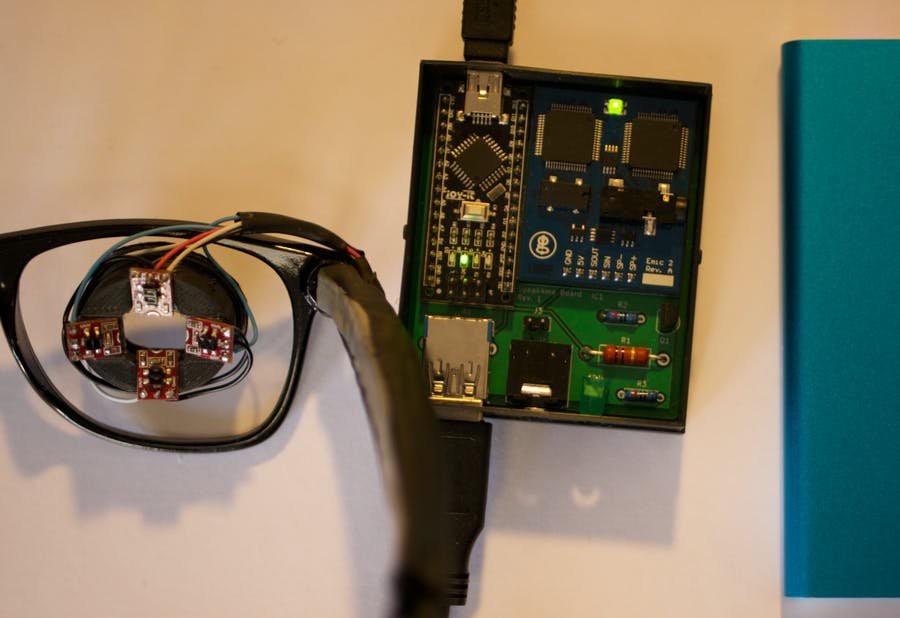

At the most basic level, the Speak4Me consists of an Arduino Nano board that controls a set of four infrared sensors, which sit within a single glass lens and point at the user’s eye. Once every 100 milliseconds, a measurement is taken to determine the location of the pupil and thus the direction of the user’s gaze. To choose a word or phrase, the user first selects a profile containing four groups of four elements each, for a total of sixteen possible choices per profile. As an example, the caretaker profile has elements such as “yes,” “I want to sit,” and even “I need medical treatment.”
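To make the selection scheme concrete, here is a minimal Python sketch of how four sensor readings could map to a gaze direction and then to one of sixteen phrases. It is not the project's firmware. The sensor layout (`DIRECTIONS`), the idea that the pupil shows up as the lowest reading, the `min_contrast` threshold, the two-step "group, then element" selection, and the placeholder entries in `DEMO_PROFILE` are all assumptions made for illustration. Only the three quoted caretaker phrases come from the project description.

```python
from typing import List, Optional, Sequence

# Assumed layout: one infrared sensor per gaze direction.
DIRECTIONS = ("up", "right", "down", "left")


def gaze_direction(readings: Sequence[int], min_contrast: int = 50) -> Optional[str]:
    """Guess the gaze direction from four reflectance readings.

    Assumption: the dark pupil reflects less infrared light, so the sensor
    it faces reports the lowest value. Returns None when no sensor is
    clearly lower than the rest.
    """
    if len(readings) != len(DIRECTIONS):
        raise ValueError("expected one reading per sensor")
    lowest = min(range(len(readings)), key=lambda i: readings[i])
    others = [r for i, r in enumerate(readings) if i != lowest]
    if min(others) - readings[lowest] < min_contrast:
        return None  # ambiguous sample, ignore it
    return DIRECTIONS[lowest]


def select_phrase(profile: List[List[str]], group_dir: str, element_dir: str) -> str:
    """Pick a phrase with two gazes: the first chooses the group, the second the element."""
    group = DIRECTIONS.index(group_dir)
    element = DIRECTIONS.index(element_dir)
    return profile[group][element]


# Hypothetical profile: 4 groups x 4 elements = 16 entries.
# Only the first three phrases are taken from the article; the rest are placeholders.
DEMO_PROFILE = [[f"phrase {g + 1}-{e + 1}" for e in range(4)] for g in range(4)]
DEMO_PROFILE[0][:3] = ["yes", "I want to sit", "I need medical treatment"]

if __name__ == "__main__":
    first = gaze_direction([400, 120, 410, 405])   # clearly "right"
    second = gaze_direction([130, 400, 395, 410])  # clearly "up"
    if first and second:
        print(select_phrase(DEMO_PROFILE, first, second))
```

In a real device this function would be called on each 100-millisecond sample, most likely with some debouncing so that a brief glance does not trigger a selection.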

After a command has been selected, it is sent to a Parallax Emic 2 text-to-speech module, which converts the text into speech and outputs the audio through a 3.5mm audio jack.
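As a rough illustration of this hand-off, the sketch below builds the bytes for a "say" command. To my understanding, the Emic 2 accepts an `S` followed by the text and a newline over a 9600-baud serial link and replies with `:` when it is ready for the next command; check the module's documentation before relying on this. The helper name `emic2_say_command` and the `max_chars` limit are assumptions for this example, and the code only formats the command rather than opening a serial port.

```python
def emic2_say_command(text: str, max_chars: int = 1023) -> bytes:
    """Format a phrase as an Emic 2 style 'say' command: b'S' + text + newline.

    Whitespace runs (including newlines) are collapsed to single spaces so
    the only newline in the result is the command terminator.
    """
    cleaned = " ".join(text.split())
    if not cleaned:
        raise ValueError("nothing to say")
    if len(cleaned) > max_chars:
        raise ValueError("phrase is longer than the assumed buffer limit")
    # Characters outside ASCII are replaced with '?' rather than sent raw.
    return b"S" + cleaned.encode("ascii", errors="replace") + b"\n"


if __name__ == "__main__":
    print(emic2_say_command("I want to sit"))
```

On the Arduino itself, the equivalent step would be writing the same bytes to the serial pins connected to the module and waiting for the `:` prompt before sending the next phrase.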

This compact Speak4Me eye-to-speech system has great potential, and you can read more about the project here on Hackaday.io.
